In the news today, someone claims a system may have feelings. Sounds impressive, right? The trouble is, folk have been making AI (and/or machine learning) claims for as long as I can remember, and in my opinion it's all been snake oil.

To me, AI is just sufficiently complicated programming that may start to appear somewhat intelligent. But there's no actual intelligence. Machine learning is just bigger datasets being processed by better algorithms. But there's no conscious learning.

Unfortunately, I think these fancy-sounding terms are mostly used to justify academic technology expenditure and to make business CVs look far better than they should.

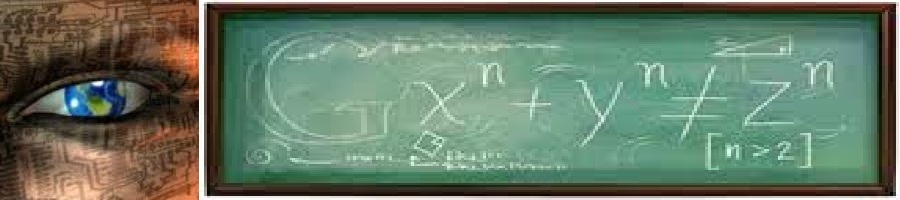

For me, though, this topic instead raises an important question about ourselves. Is our sense of self not an illusion too? Is it not really a sufficiently complicated set of parallel feedback processes interacting with a large memory containing our backstory, principles of logic and linguistic constructs? With enough monitoring of our internal processes and stored experiences, a sense of self begins to emerge.

Our senses tell us a lot about the world, but they're never perfect. Nor are our mental models of the world ideal, hence we make many mistakes. In reality, we're made up of lots of overlapping pains and pleasures, sharp and soft, as well as fuzzy facts and, yes, things that feel like, erm, feelings...